1.

Limitations of Current RAG Systems for LLMs

Analyzed the limitations of existing RAG systems, identified concrete failure cases with examples, and outlined avenues for future improvement. The failure cases covered handling irrelevant noise from external sources, mathematical reasoning, integrating information across sources, interpreting negative or missing statements, resolving conflicting knowledge, and the difficulty of evaluation.

2.

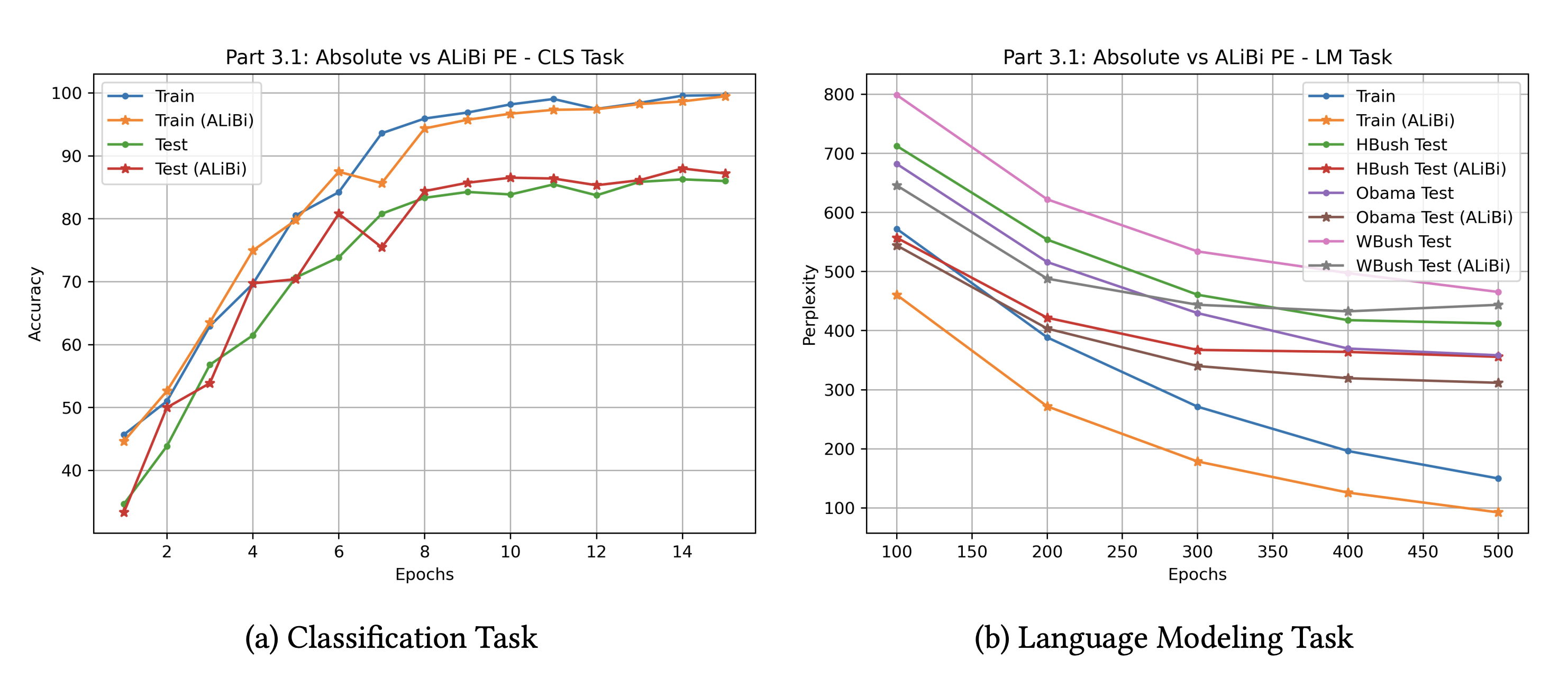

Transformer-based Language Modeling and Classification

Implemented a custom transformer encoder and feedforward classifier from scratch, achieving 86.2% accuracy on political speech classification. Pretrained a GPT-like transformer decoder that reached a perplexity of 149.8 (vocabulary size: 5,755) on political speeches. Further reduced perplexity by 38.2% using ALiBi positional encoding and by 52% using Xavier/Normal initialization.
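
As a rough illustration of the ALiBi idea mentioned above, the sketch below builds the per-head slopes and the causal bias that is added to raw query-key scores in place of positional embeddings, plus the usual definition of perplexity as the exponential of the mean negative log-likelihood. It is a minimal pure-Python sketch, assuming a power-of-two head count and the geometric slope schedule from the ALiBi paper; the function names are hypothetical and this is not the project's actual code.

```python
import math


def alibi_slopes(num_heads: int) -> list:
    """Per-head ALiBi slopes m_h = 2^(-8h/n) for h = 1..n (power-of-two head counts)."""
    if num_heads <= 0 or num_heads & (num_heads - 1):
        raise ValueError("this sketch assumes num_heads is a power of two")
    return [2 ** (-8 * h / num_heads) for h in range(1, num_heads + 1)]


def alibi_bias(seq_len: int, slope: float) -> list:
    """Causal ALiBi bias: -slope * (i - j) for key j <= query i, -inf for future keys."""
    return [[-slope * (i - j) if j <= i else float("-inf") for j in range(seq_len)]
            for i in range(seq_len)]


def softmax(row: list) -> list:
    m = max(row)  # the diagonal entry is always finite, so m is finite
    exps = [math.exp(x - m) for x in row]  # exp(-inf) == 0.0 masks future keys
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(scores: list, slope: float) -> list:
    """Add the ALiBi bias to raw query-key scores (no positional embeddings), then softmax."""
    bias = alibi_bias(len(scores), slope)
    return [softmax([s + b for s, b in zip(s_row, b_row)])
            for s_row, b_row in zip(scores, bias)]


def perplexity(token_log_probs: list) -> float:
    """exp of the mean negative log-likelihood over the evaluated tokens."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


print(alibi_slopes(8)[:3])  # [0.5, 0.25, 0.125]
```

Because the bias grows linearly with query-key distance, distant tokens are penalized more strongly, which is what lets ALiBi replace learned or sinusoidal position embeddings. A model that predicts uniformly over a 5,755-token vocabulary would have a perplexity of exactly 5,755, which gives a sense of scale for the 149.8 figure.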

3.

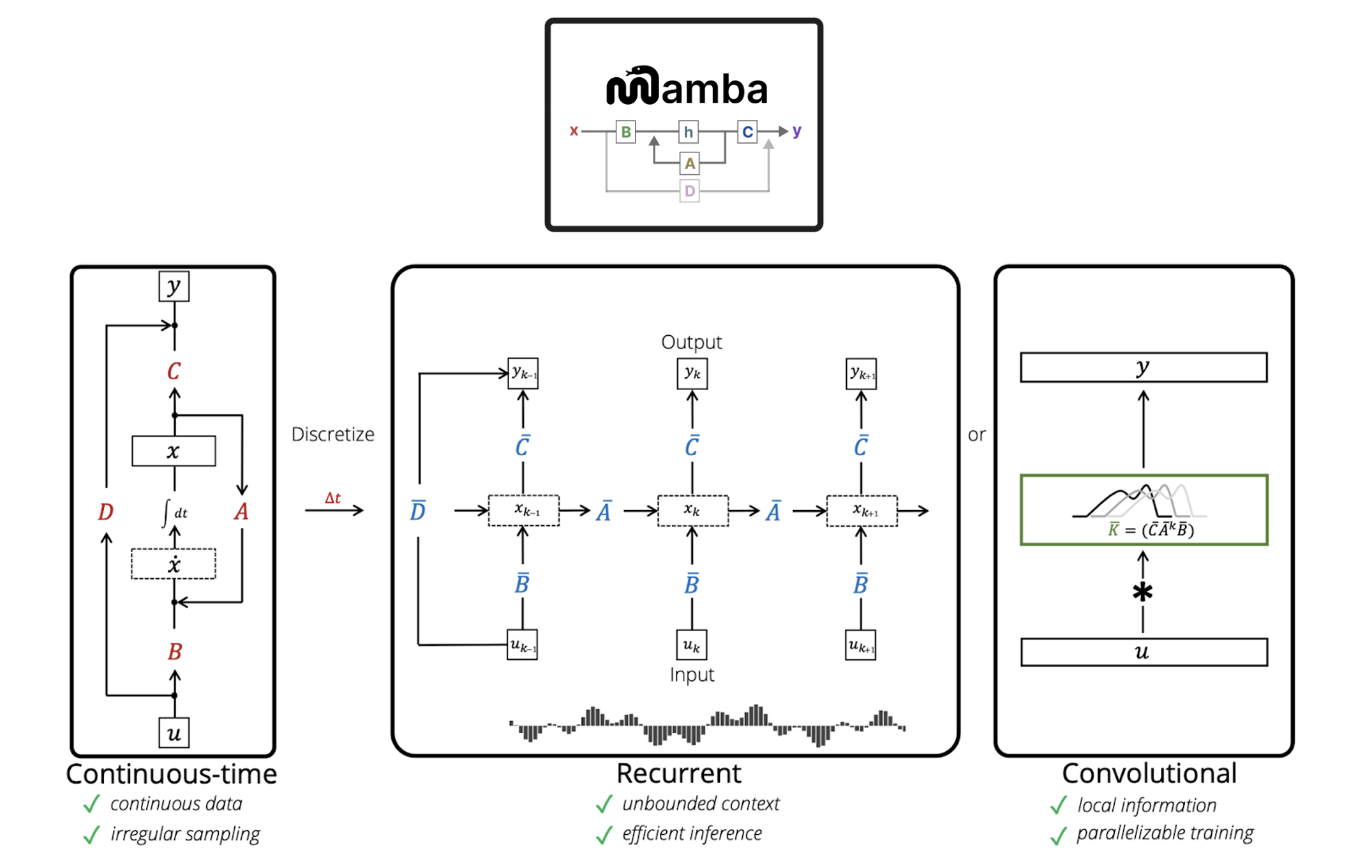

An Overview of the Mamba State Space Model

Investigated limitations of subquadratic-time architectures in handling long sequences, focusing on the lack of content-based reasoning. Performed a case study on Mamba, a novel neural network architecture integrating selective structured state space models (SSMs) to address this limitation. Mamba demonstrates improved performance on language processing tasks compared to existing subquadratic-time architectures.
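
The "selective" part of a selective SSM is that the step size and the B and C matrices depend on the current input, so the recurrence can decide per token how much to remember. The sketch below shows that recurrence for a single scalar channel with a diagonal state matrix. It is a simplified, sequential illustration under stated assumptions (hypothetical parameter names, B_t and C_t projected from the scalar input alone, a first-order approximation for discretizing B), not Mamba's implementation, which runs a hardware-aware parallel scan over many channels.

```python
import math


def softplus(z: float) -> float:
    # Numerically stable log(1 + e^z).
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def selective_scan(xs, A, w_B, w_C, w_delta, b_delta):
    """Sequential selective SSM scan over one scalar input channel.

    A        : N negative diagonal entries of the continuous-time state matrix (fixed).
    w_B, w_C : N hypothetical weights that make B_t and C_t functions of the input x_t.
    w_delta, b_delta : scalars giving the step size Delta_t = softplus(w_delta * x_t + b_delta).
    """
    h = [0.0] * len(A)
    ys = []
    for x in xs:
        delta = softplus(w_delta * x + b_delta)  # input-dependent step size
        B = [wb * x for wb in w_B]               # input-dependent input matrix B_t
        C = [wc * x for wc in w_C]               # input-dependent output matrix C_t
        for k, a in enumerate(A):
            a_bar = math.exp(delta * a)          # zero-order-hold discretization of A
            b_bar = delta * B[k]                 # first-order (Euler) approximation for B
            h[k] = a_bar * h[k] + b_bar * x      # h_t = A_bar * h_{t-1} + B_bar * x_t
        ys.append(sum(c * hk for c, hk in zip(C, h)))  # y_t = C_t . h_t
    return ys
```

With A negative, a large Delta_t drives A_bar toward 0, so the state is overwritten by the current token; a small Delta_t keeps A_bar near 1, so the token is largely ignored and earlier context persists. A time-invariant SSM cannot make this per-token choice, which is the content-based reasoning gap described above.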

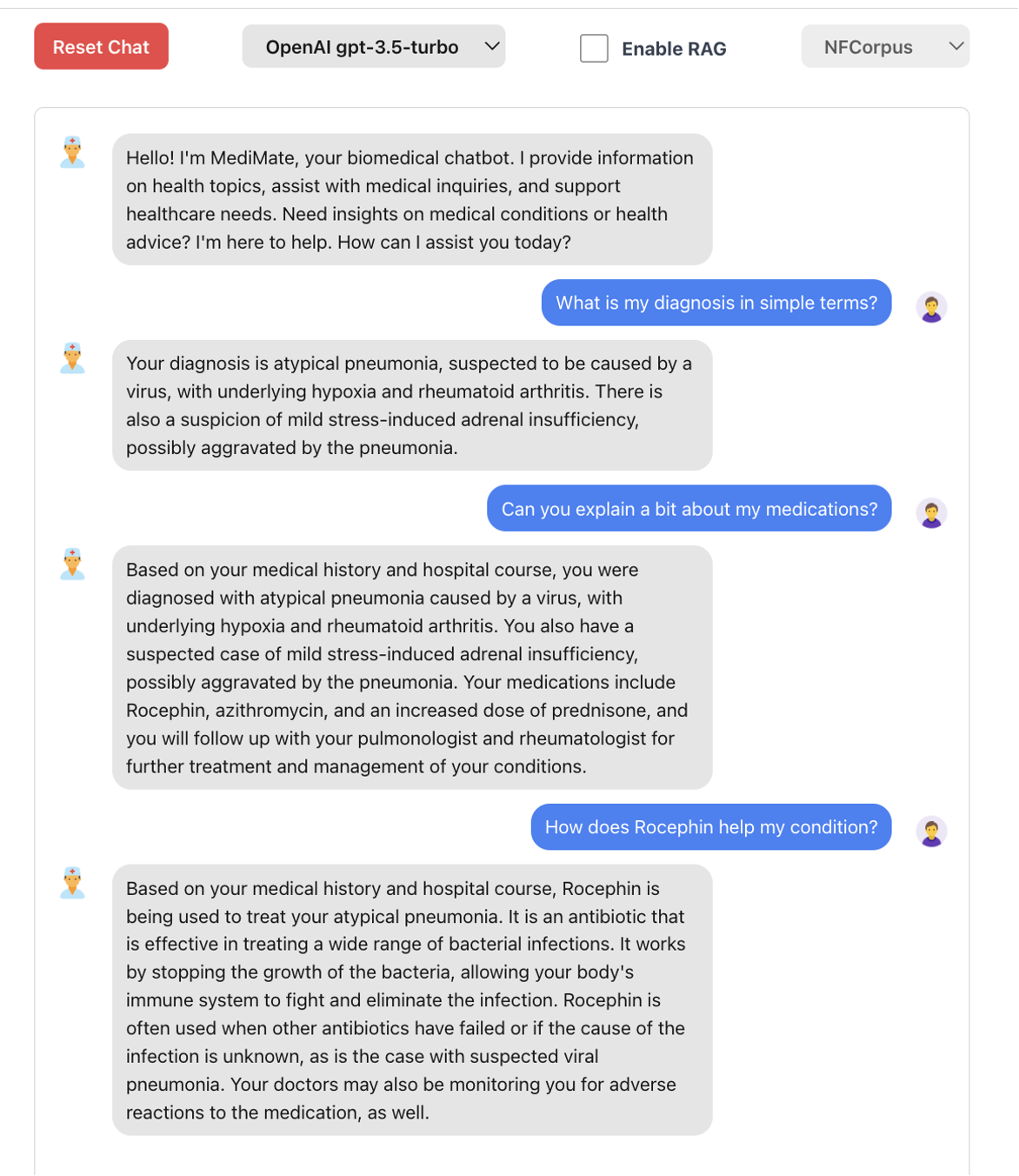

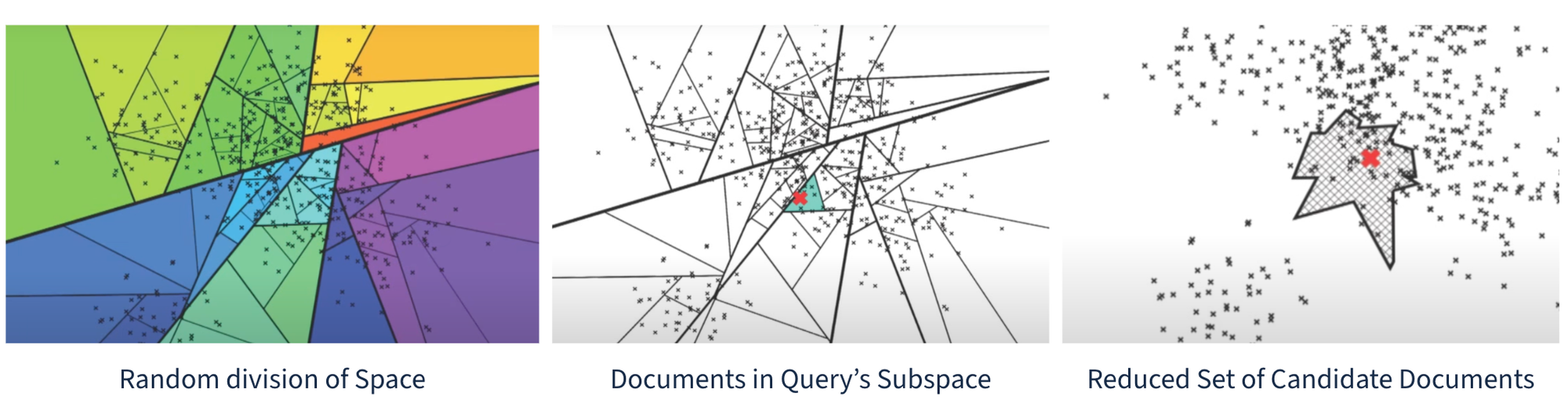

Improved BLEU and ROUGE scores by applying Retrieval-Augmented Generation (RAG) to OpenAI’s LLM for closed-domain question answering in medicine. Built a Flask web app, backed by OpenAI Embeddings and a FAISS vector index, that supports multimodal input for patient interactions.
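
The retrieval step of a RAG pipeline like the one above can be sketched in a few lines: embed the documents and the question, find the nearest documents, and prepend them to the prompt. The sketch below does exact inner-product search over L2-normalized vectors (cosine similarity), which is roughly what a flat inner-product FAISS index computes. The 3-dimensional vectors, documents, and prompt wording are hypothetical stand-ins for real OpenAI embeddings and the app's actual prompt.

```python
import math


def normalize(v):
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def top_k(query_vec, doc_vecs, k):
    """Exact inner-product search over L2-normalized vectors, i.e. cosine similarity."""
    q = normalize(query_vec)
    scored = [(sum(a * b for a, b in zip(q, normalize(d))), i)
              for i, d in enumerate(doc_vecs)]
    scored.sort(key=lambda pair: pair[0], reverse=True)  # stable: ties keep index order
    return [i for _, i in scored[:k]]


def build_prompt(question, passages):
    """Ground the LLM by placing retrieved passages ahead of the question."""
    context = "\n".join(f"- {p}" for p in passages)
    return ("Answer using only the context below.\n\n"
            f"Context:\n{context}\n\nQuestion: {question}")


# Toy 3-d vectors standing in for real embedding-model output (hypothetical values).
docs = ["Aspirin can reduce fever.",
        "Insulin regulates blood sugar.",
        "Flask is a Python web framework."]
doc_vecs = [[1.0, 0.1, 0.0], [0.0, 1.0, 0.1], [0.0, 0.0, 1.0]]
query_vec = [0.1, 1.0, 0.0]

hits = top_k(query_vec, doc_vecs, k=2)
print(hits)  # [1, 0]
print(build_prompt("What regulates blood sugar?", [docs[i] for i in hits]))
```

In a real deployment the linear scan in `top_k` is what a vector index replaces, and the resulting prompt is sent to the LLM instead of printed.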

5.

Closed Domain Question Answering

Improved precision, recall, and computational efficiency by using a vector database for retrieval in Retrieval-Augmented Generation (RAG) with OpenAI’s LLM across diverse datasets. Compared the approach against Latent Semantic Indexing and Singular Value Decomposition, demonstrating superior performance.

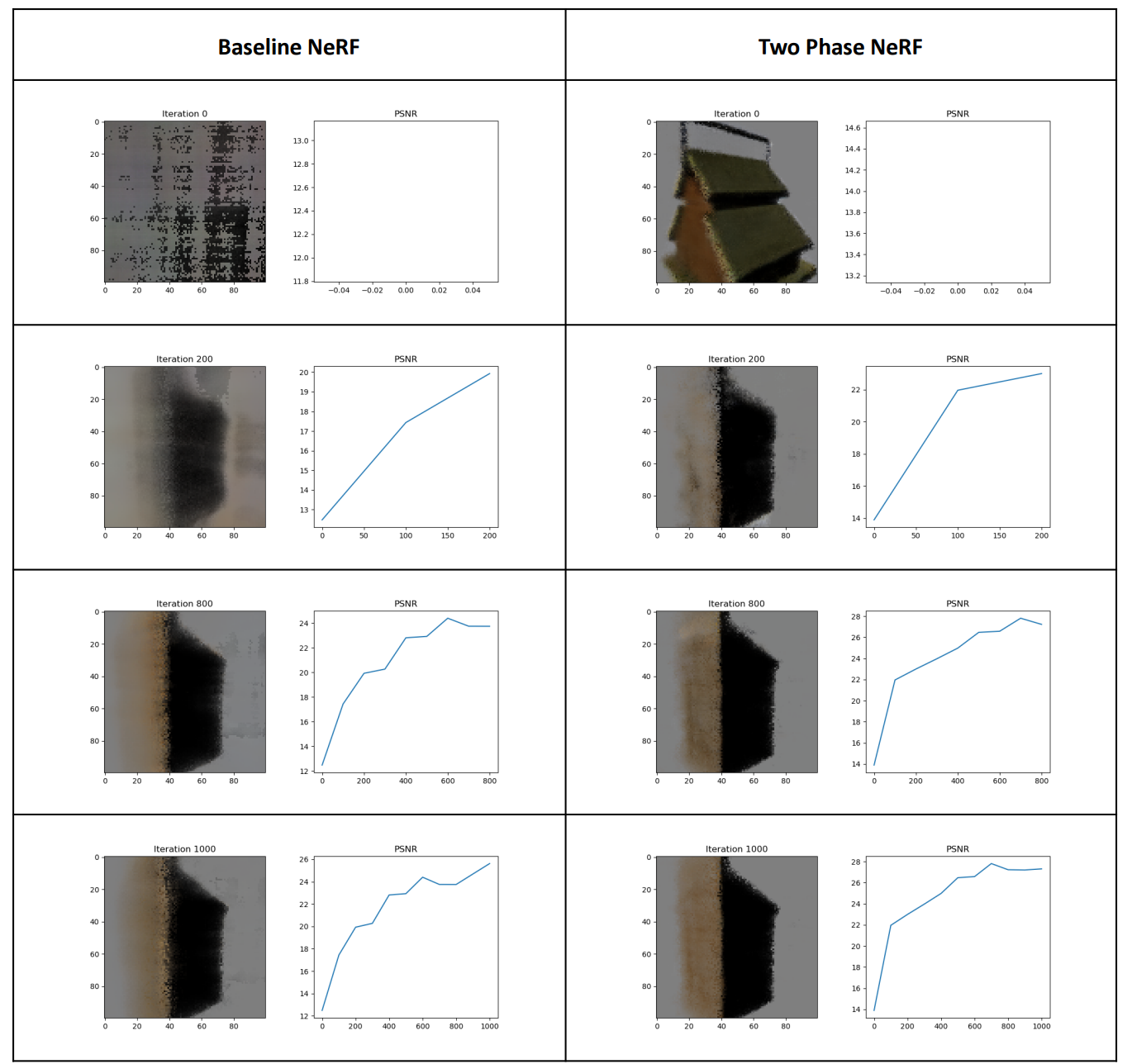

Reduced NeRF reconstruction time by up to 40% by using a NeRF model pretrained on objects from the ShapeNet dataset as the starting point for downstream training on the target object.

7.

Neural Rendering for 3D Reconstruction and View Synthesis

Explored advancements in neural rendering for 3D reconstruction and view synthesis, focusing on techniques driven by neural networks. Reviewed datasets, evaluation metrics, and the evolution from classical methods to Neural Radiance Fields (NeRF). Discussed NeRF fundamentals, efficiency, sparse data handling, dynamic scenes, composition, and application-specific NeRFs, with an outlook on future research directions.
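
At the core of NeRF is volume rendering: a pixel's color is a weighted sum of colors sampled along its camera ray, C = sum_i T_i * (1 - exp(-sigma_i * delta_i)) * c_i, where T_i = exp(-sum_{j<i} sigma_j * delta_j) is the transmittance reaching sample i. The sketch below implements that quadrature for one ray in pure Python, assuming the densities, colors, and sample spacings have already been produced by the network and the ray sampler.

```python
import math


def render_ray(sigmas, colors, deltas):
    """Alpha-composite samples along one ray using the NeRF quadrature rule.

    sigmas: volume density at each sample; colors: RGB triples; deltas: distance
    to the next sample. Returns the pixel color and the per-sample weights.
    """
    transmittance = 1.0          # T_1 = 1: nothing blocks the ray before the first sample
    rgb = [0.0, 0.0, 0.0]
    weights = []
    for sigma, color, delta in zip(sigmas, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this segment
        weight = transmittance * alpha           # w_i = T_i * alpha_i
        weights.append(weight)
        rgb = [acc + weight * c for acc, c in zip(rgb, color)]
        transmittance *= 1.0 - alpha             # T_{i+1} = T_i * exp(-sigma_i * delta_i)
    return rgb, weights
```

The weights always sum to 1 - exp(-sum_i sigma_i * delta_i), so a ray through empty space contributes nothing, and an opaque sample hides everything behind it. Because every step is differentiable, the same formula lets NeRF train its density and color network from photographs alone.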

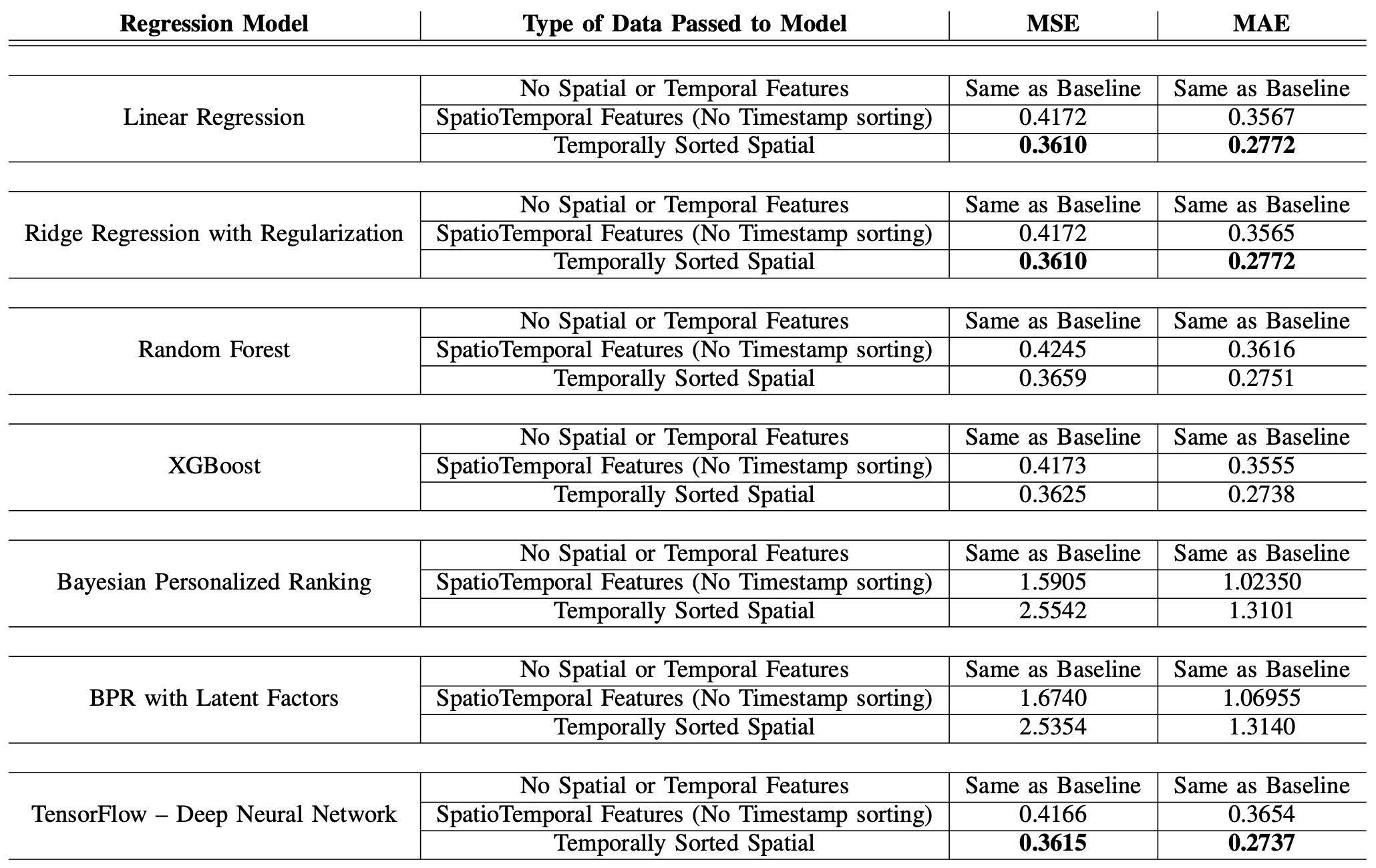

Explored machine/deep learning regression models on the Google Local Reviews dataset, highlighting the impact of spatiotemporal data on user ratings.

9.

Rumour Stance Classification of Tweets

Explored machine/deep learning classification models on the SemEval-2017 Task 8 dataset to classify tweets based on their stance into four categories: supporting (S), denying (D), querying (Q), or commenting (C).

10.

Efficient Meme Retrieval System

Built a GUI-based search engine that retrieves relevant memes from a database in response to a user query.

11.

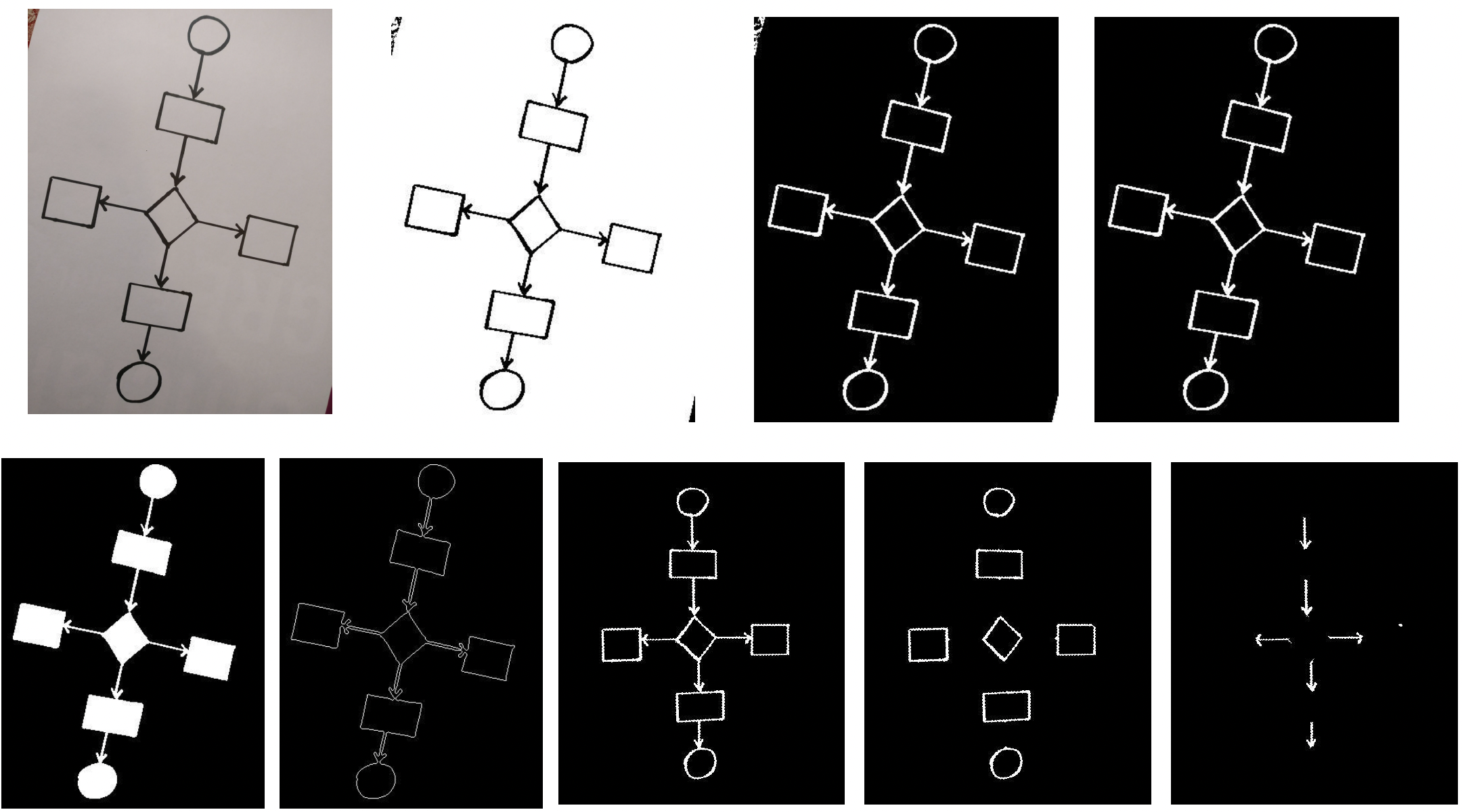

Automatic Handwritten Flowchart Converter

Developed a model that constructs a digital image of a handwritten flowchart using image-processing techniques.

12.

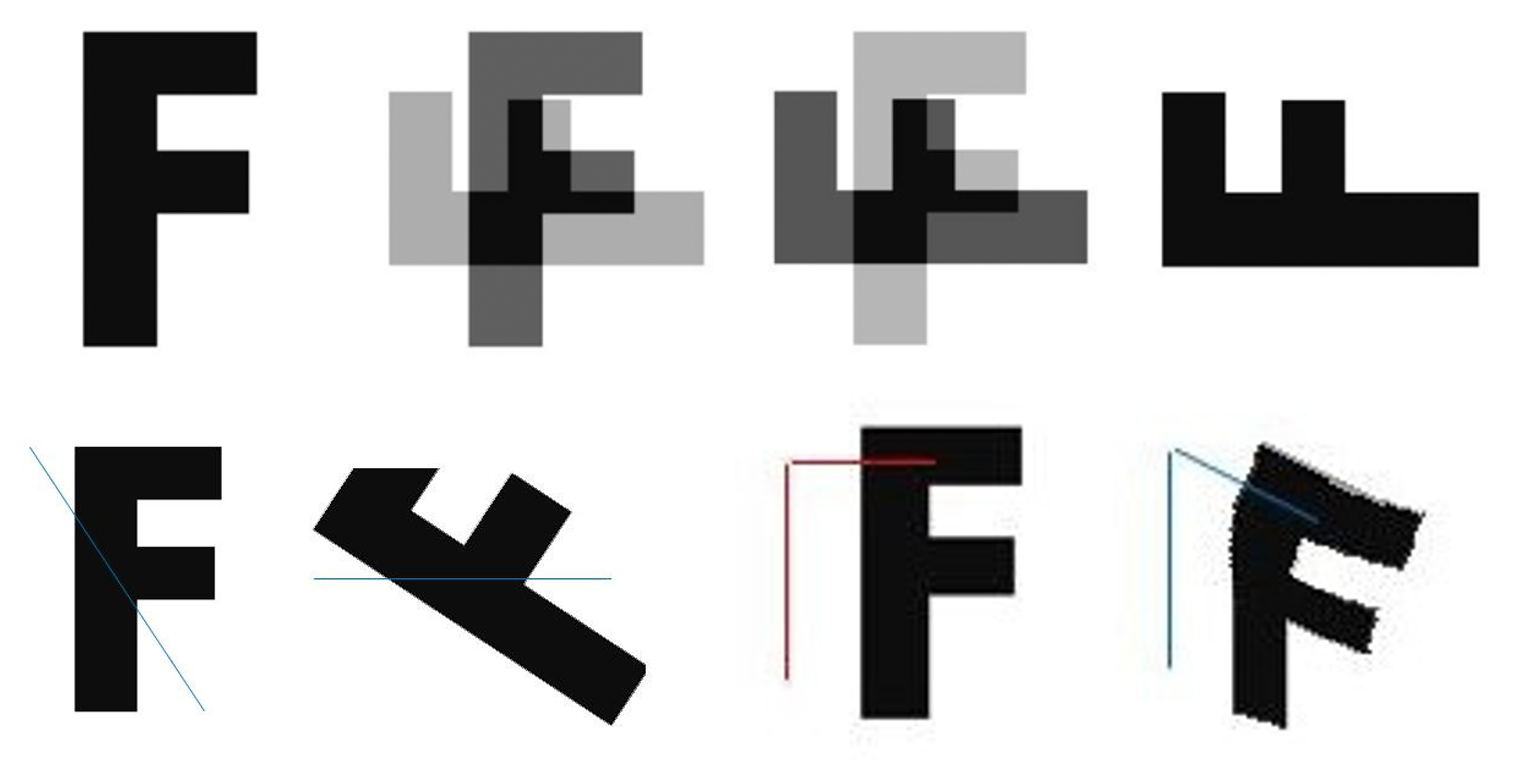

Feature-Based Image Metamorphosis

Designed a visual animation tool that smoothly transforms one image into another.
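
Feature-based metamorphosis is commonly done with Beier and Neely's line-pair warping: each destination pixel is located relative to a feature line (a position u along it and a signed distance v from it), and the same (u, v) is mapped onto the corresponding source line. The sketch below shows the single-line-pair mapping and the per-pixel cross-dissolve, assuming the tool follows this approach. The full method averages the displacements from many line pairs with distance-based weights, and warps both images toward interpolated lines before blending; those steps are omitted here.

```python
import math


def perp(v):
    # Perpendicular vector of the same length (rotated 90 degrees counterclockwise).
    return (-v[1], v[0])


def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]


def sub(a, b):
    return (a[0] - b[0], a[1] - b[1])


def map_point(x, p, q, p_src, q_src):
    """Map destination pixel x to source coordinates via one line pair (P, Q) -> (P', Q')."""
    pq = sub(q, p)
    length = math.sqrt(dot(pq, pq))
    u = dot(sub(x, p), pq) / (length * length)  # fractional position along the line
    v = dot(sub(x, p), perp(pq)) / length        # signed perpendicular distance in pixels
    pq_src = sub(q_src, p_src)
    len_src = math.sqrt(dot(pq_src, pq_src))
    n = perp(pq_src)
    return (p_src[0] + u * pq_src[0] + v * n[0] / len_src,
            p_src[1] + u * pq_src[1] + v * n[1] / len_src)


def cross_dissolve(c0, c1, t):
    """Blend two pixel colors; t = 0 gives c0 and t = 1 gives c1."""
    return tuple((1 - t) * a + t * b for a, b in zip(c0, c1))
```

For example, rotating the source line by 90 degrees, `map_point((3.0, 2.0), (0.0, 0.0), (1.0, 0.0), (0.0, 0.0), (0.0, 1.0))` returns `(-2.0, 3.0)`, the same point rotated with the line. Distance along the line scales with the line's length, while distance from the line is kept in absolute pixels.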